You might be using ksnip or Flameshot to take screenshots of single or multiple web pages. Both are great tools with rich features that make it easy to take a screenshot and edit the image afterward.


What if I told you about some hidden command-line tools that let you easily capture website screenshots from your terminal app? You heard it right. In this article, you will learn how to take a website screenshot from your terminal app in Linux.

1. Pageres: Capture Website Screenshots

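Pageres is a fast tool for capturing screenshots of websites at various resolutions; according to its project page, it can generate 100 screenshots of 10 different websites in just over a minute. All you have to do is pass the website URL and, optionally, one or more resolutions. Template variables let you customize the output filename with values such as the size, date, and time.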

Make sure Snap is installed and the snapd service is running on your system, then run the following command to install Pageres.

$ sudo snap install pageres

After the installation is complete, run the following command to get a basic idea of how Pageres works.

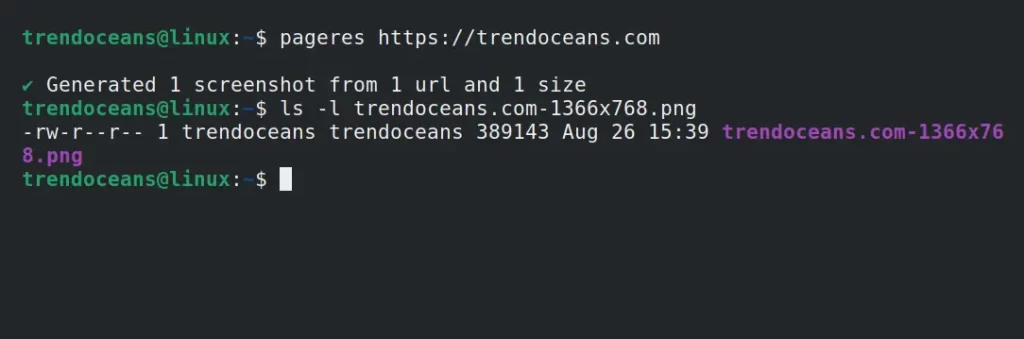

$ pageres https://trendoceans.com

Below is the output of the above command.

Without a size argument, Pageres captures the page at its default resolution of 1366×768. You can pass a custom resolution instead, as in the next example, and you can control the output filename with the following template variables:


- url: The URL with the protocol and www stripped and slashes replaced by !, e.g. https://trendoceans.io/blog/ becomes trendoceans.io!blog
- size: The specified size, e.g. 1440x1080
- width: The width of the specified size, e.g. 1440
- height: The height of the specified size, e.g. 1080
- crop: Outputs -cropped when the crop option is enabled
- date: The current date (YYYY-MM-DD), e.g. 2021-08-26
- time: The current time (HH-mm-ss), e.g. 21-15-11

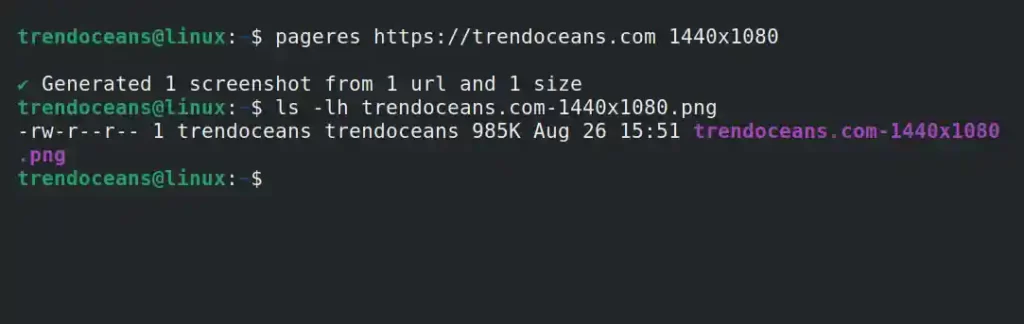
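These template variables are passed through Pageres's --filename option. The sketch below shows one such template, plus a small bash helper, slugify_url (a hypothetical name, not part of Pageres), that roughly approximates how the url variable is derived so you can preview a filename before capturing. Pageres's real slug logic handles more edge cases than this approximation.

```bash
#!/usr/bin/env bash
# Hypothetical helper: a rough approximation of how Pageres builds the
# url template variable. It strips the protocol, a leading "www.", and one
# trailing slash, then replaces the remaining slashes with "!".
slugify_url() {
  local u="$1"
  u="${u#*://}"   # drop http:// or https:// if present
  u="${u#www.}"   # drop a leading www.
  u="${u%/}"      # drop one trailing slash
  printf '%s\n' "$u" | tr '/' '!'
}

slugify_url "https://trendoceans.io/blog/"   # prints trendoceans.io!blog

# Name the capture "<date>_<url>-<size>" using the template variables.
# Single quotes stop the shell from interpreting <, %, and >.
if command -v pageres >/dev/null 2>&1; then
  pageres https://trendoceans.com 1440x1080 --filename='<%= date %>_<%= url %>-<%= size %>'
else
  echo "pageres is not installed; skipping capture" >&2
fi
```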

For example, to capture a webpage screenshot in the 1440x1080 resolution, use the following command.

$ pageres https://trendoceans.com 1440x1080

Below is the output of the above command.

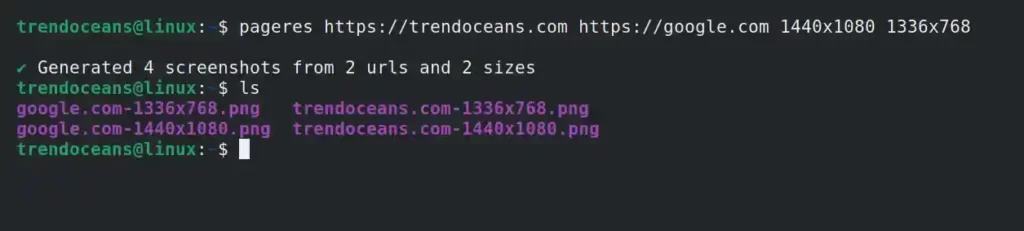

If you want to capture screenshots of multiple websites with multiple resolutions, then specify each one of them, as shown below.

$ pageres https://trendoceans.com https://google.com 1440x1080 1336x768

Below is the output of the above command.

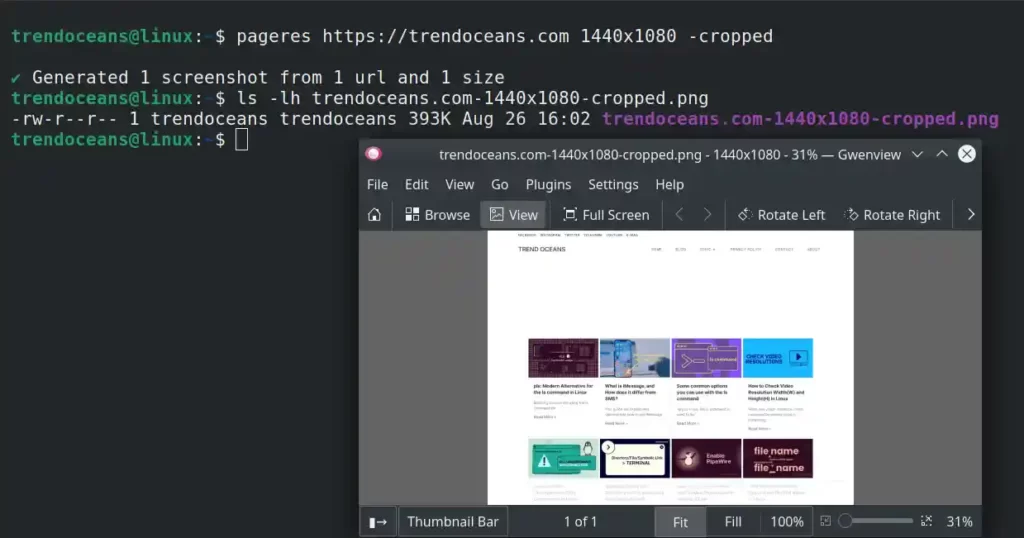
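If you run batch captures like this regularly, it helps to keep each run's images apart. The following is a minimal sketch assuming a hypothetical screenshots/YYYY-MM-DD folder layout: it creates a dated directory and runs Pageres inside it, since the Pageres CLI saves images to the current directory.

```bash
#!/usr/bin/env bash
# Sketch: store each day's captures in their own folder.
# The screenshots/YYYY-MM-DD layout is an assumption for illustration.
urls=(https://trendoceans.com https://google.com)
sizes=(1440x1080 1366x768)

outdir="screenshots/$(date +%F)"   # e.g. screenshots/2021-08-26
mkdir -p "$outdir"

if command -v pageres >/dev/null 2>&1; then
  # Run in a subshell so the script's own working directory is unchanged.
  # Every URL is captured at every size: 2 URLs x 2 sizes = 4 images.
  (cd "$outdir" && pageres "${urls[@]}" "${sizes[@]}")
else
  echo "pageres is not installed; skipping capture" >&2
fi
```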

By default, Pageres captures the complete web page. To capture only the visible region, cropped to the height of the chosen resolution, add the --crop flag; the resulting filename ends with -cropped.

$ pageres https://trendoceans.com 1440x1080 --crop

Below is the output of the above command.

To learn more, you can check the help section of this application by using the following command.

$ pageres --help

2. CutyCapt: Capture WebKit’s Rendering of a Web Page

CutyCapt is an open-source tool that captures WebKit’s rendering of a web page in a variety of vector and bitmap formats, such as SVG, PDF, PS, PNG, JPEG, TIFF, GIF, and BMP.

CutyCapt is available in the default repositories of Ubuntu and many other distributions, and Kali Linux ships it preinstalled.

On Ubuntu and other Debian-based systems, run the following command to install it.

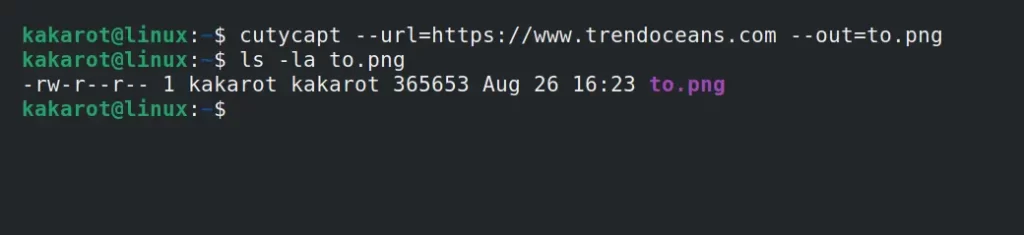

$ sudo apt install cutycapt

After the installation is complete, capture a web page by passing its URL with the --url option and the output filename of your choice with the --out option. The file extension determines the output format.

$ cutycapt --url=https://www.trendoceans.com --out=to.png

Below is the output of the above command.

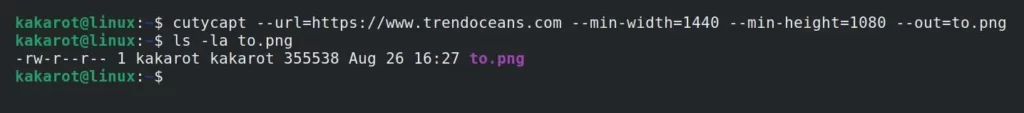
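Because CutyCapt picks the output format from the --out extension, saving one page in several of the formats listed earlier only takes a loop. This is a minimal sketch; the output filenames are placeholders.

```bash
#!/usr/bin/env bash
# Sketch: save the same page as PNG, PDF, and SVG.
# CutyCapt infers the format from the --out extension (--out-format overrides it).
url="https://www.trendoceans.com"
formats=(png pdf svg)
outputs=()

for fmt in "${formats[@]}"; do
  out="trendoceans.$fmt"          # placeholder output name
  outputs+=("$out")
  if command -v cutycapt >/dev/null 2>&1; then
    cutycapt --url="$url" --out="$out"
  else
    echo "cutycapt is not installed; would write $out" >&2
  fi
done
```

On a server without a graphical display, CutyCapt generally needs a virtual X server; its project page suggests wrapping the command with xvfb-run.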

Use --min-width and --min-height to set the minimum width and height of the screenshot (800 and 600 pixels by default); a long page can still produce a taller image.

$ cutycapt --url=https://www.trendoceans.com --min-width=1440 --min-height=1080 --out=to.png

Below is the output of the above command.

You can also set a delay before capture, enable or disable JavaScript, turn automatic image loading on or off, override the user agent, and more; the manual page documents all of these options.
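As an illustration, the sketch below passes several of these options in one call. The option names come from CutyCapt's help text; the values and the user-agent string are arbitrary examples. Note that --delay and --max-wait take milliseconds.

```bash
#!/usr/bin/env bash
# Sketch: combine several CutyCapt options (values are examples only).
args=(--url=https://www.trendoceans.com --out=to-custom.png)
args+=(--delay=2000)                  # wait 2 s after the page has loaded
args+=(--max-wait=30000)              # stop waiting for the load after 30 s
args+=(--javascript=off)              # disable JavaScript execution
args+=(--auto-load-images=on)         # keep automatic image loading enabled
args+=(--user-agent="Mozilla/5.0 (X11; Linux x86_64)")  # example UA string

if command -v cutycapt >/dev/null 2>&1; then
  cutycapt "${args[@]}"
else
  echo "cutycapt is not installed; would run: cutycapt ${args[*]}" >&2
fi
```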

To view the manual page, run:

$ man cutycapt

I hope this article was useful to you. If you have any more questions, let us know in the comment section.
